As an intern for CS4IL, I volunteered to build an all-in-one online platform for Illinois' inaugural state-wide coding competition.

Having participated in national competitions myself, I've seen firsthand how these platforms have historically fallen short: school-issued devices struggle with advanced development work, collaboration features are nonexistent or not pedagogically effective, and cutting-edge tools like AI are rarely integrated in ways that equitably support students who want to use them responsibly. I wanted Foundry to address all of these challenges.

Features

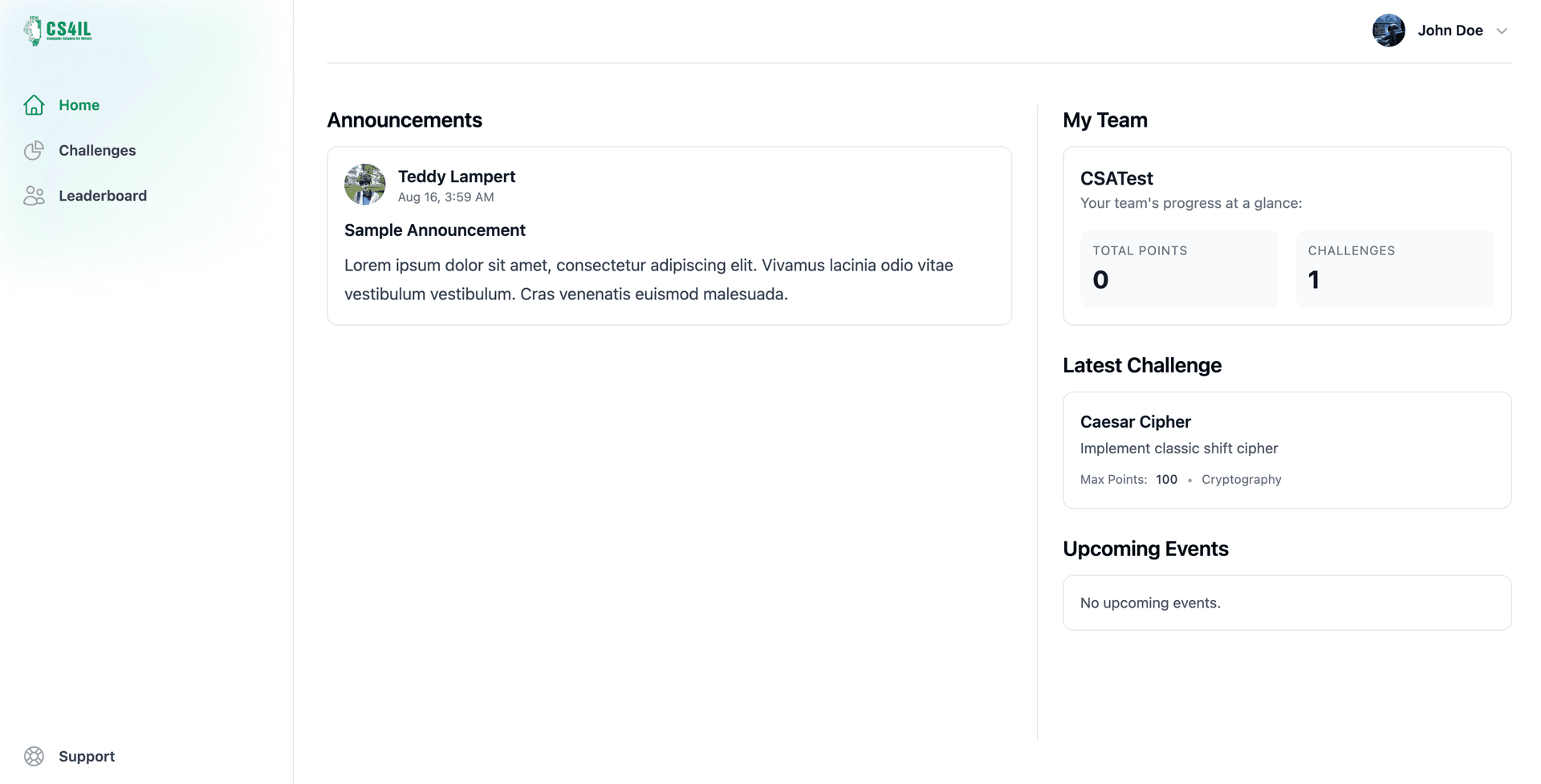

Foundry is split into two sections: the portal (the management and registration side) and the IDE (the embedded code editor).

Portal

- Create your account and log in with school SSO platforms like Google, Clever, Microsoft, or ClassLink

- Register a new team as an advisor or participant and invite other students to join via link or 6-digit code

- View announcements, tutorial videos, and other informational/educational resources posted by event admins

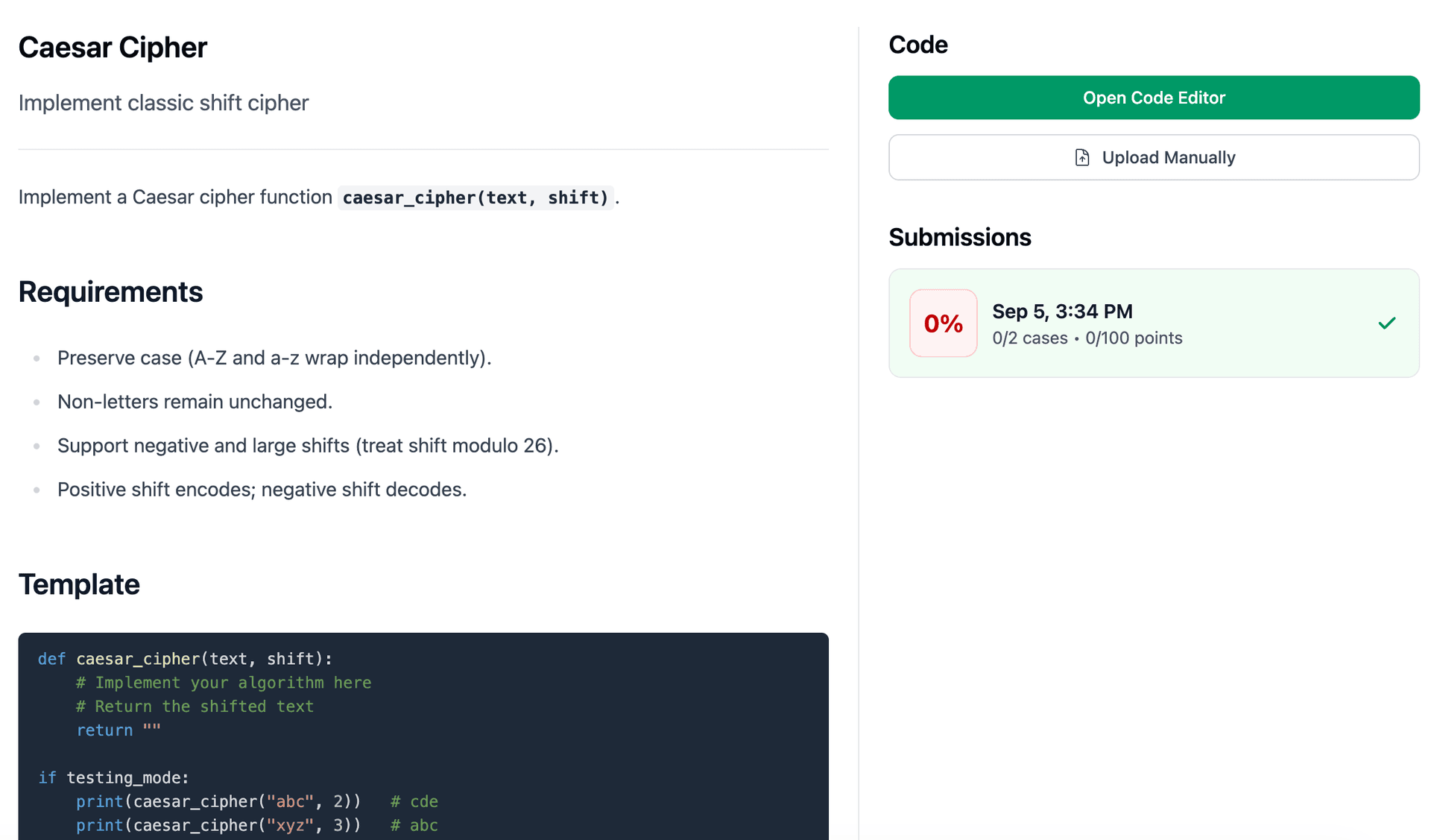

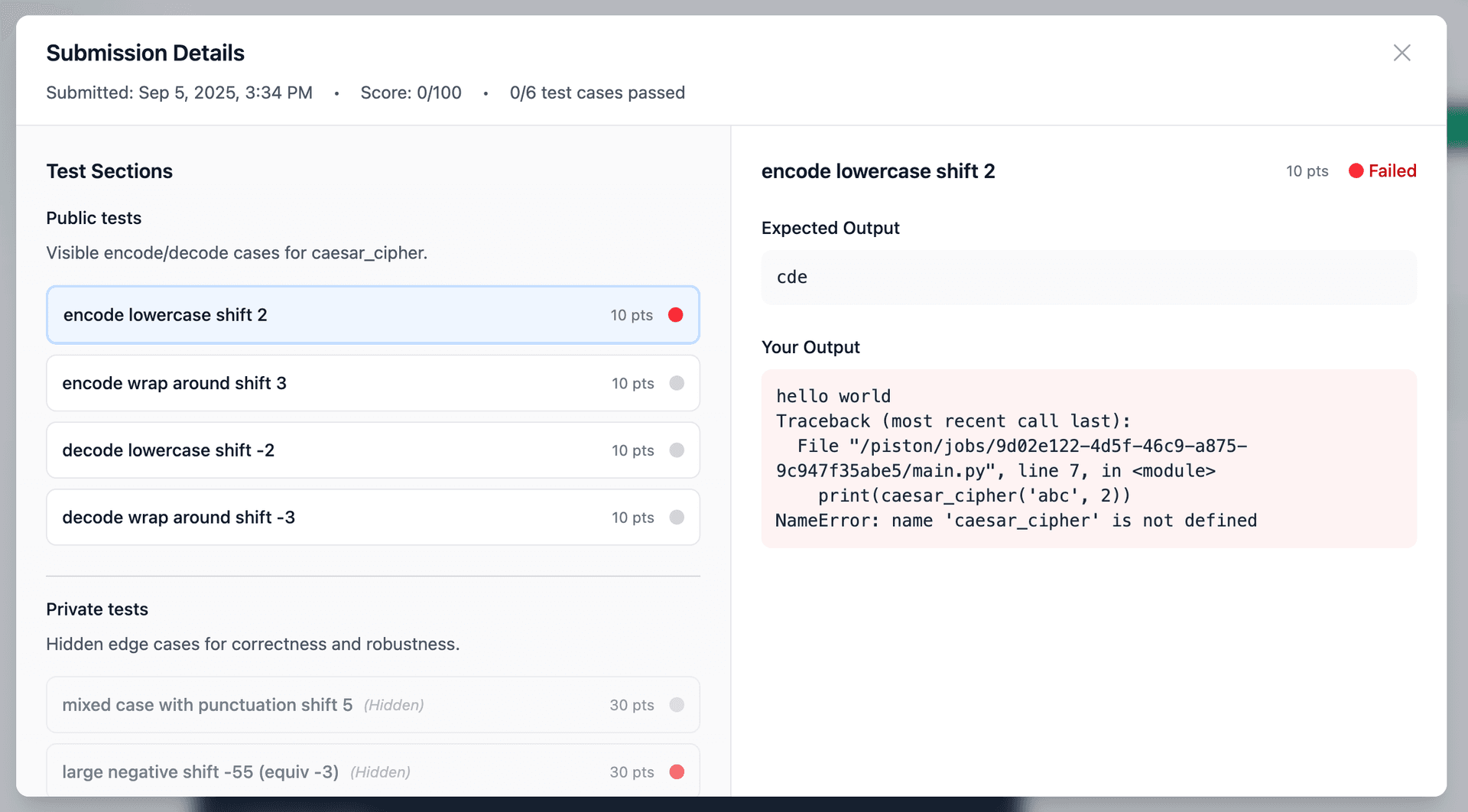

- Upload solutions to problems and view autograding results (including errors and test cases)

- Check your place on the leaderboard to see how you compare to other teams

- Sign up for in-person events and opportunities (like workshops and meets)

- Create a website for your team with custom content and branding

- Collect donations through CS4IL's fiscal sponsorship and issue real virtual credit cards to spend them

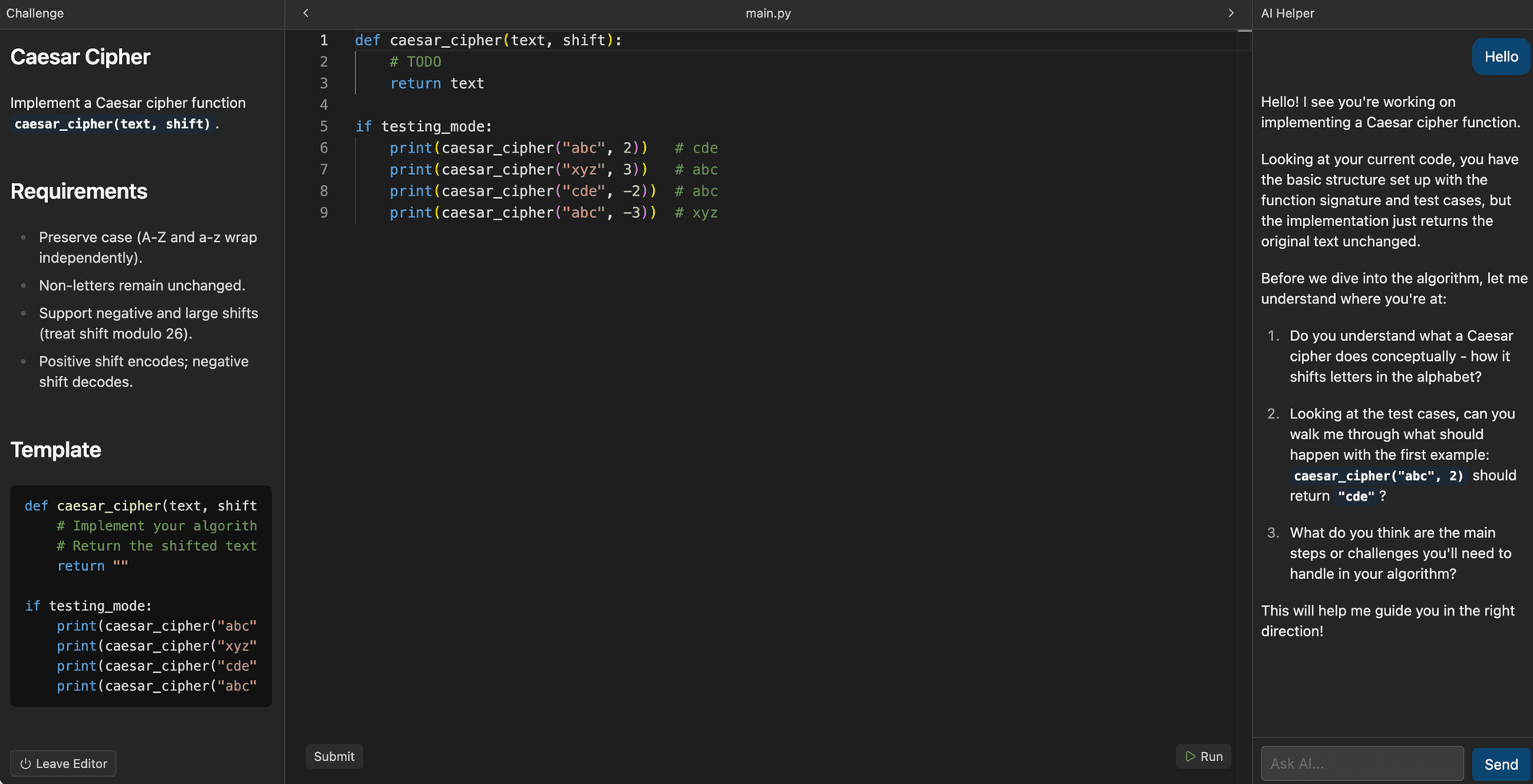

IDE

- Runs entirely in your browser on entry-level hardware including iPads and Chromebooks

- Access the full computing power and file system of a Linux cloud VM

- Edit files in real-time with Google Docs-like collaboration

- Talk to a pedagogically grounded AI tutor in the sidebar that knows the problem and your current solution

- Submit solutions directly to the portal

Engineering

My top priority in designing Foundry's infrastructure was reliability, which led me to choose a fully serverless architecture on Cloudflare's Workers Platform. This ended up being a great choice: I had very limited time to develop Foundry, and going serverless let me add features without having to worry about horizontal scaling or load balancing. It also made the codebase more accessible to a wide range of future contributors and maintainers, which was a goal of mine from the start.

I chose to build the site in React with Vite and TypeScript. React is a good fit given how stateful many aspects of the site are, while using Vite rather than a framework like Next.js gives me more granular control over integration with other libraries. The code editor uses Monaco for VS Code-style editing, with full syntax highlighting and autocomplete. I also chose Neon for PostgreSQL hosting, which I highly recommend given how well it keeps up with serverless architectures and how great its DX is!

One of the most interesting challenges in building Foundry was real-time collaboration under serverless constraints at the required scale: the project requirements specified a minimum of 50,000 concurrent editors at 5 per team workspace, which works out to 10,000 simultaneously active workspaces. To tackle this, I used Cloudflare's Durable Objects. Each team's workspace gets its own object, which serves as the single source of truth for workspace state and also runs the Y.js conflict resolution logic, role-based access control, and several bespoke features. This closely resembled my approach to Tabula, except that Cloudflare's environment is closer to a traditional WebSocket setup and feels more easily adaptable to uncharted use cases (Monaco has first-class collaboration support, but it's nothing compared to Tiptap's). Durable Objects also handle concurrency and scale very cleanly, because I can simply spin up a new instance for each team and let it go inactive when not in use.
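As a rough illustration of the "one coordinator per team workspace" idea (not Foundry's actual code, and deliberately not the Durable Objects API), here is a plain TypeScript model. `TeamRoom`, `RoomRegistry`, `Peer`, and the read-only `viewer` role are all hypothetical names. The updates are opaque byte arrays, which is the form Y.js uses for document updates; the `send` callback stands in for a WebSocket.

```typescript
// Plain-TypeScript model of "one coordinator per team workspace".
// Not the Durable Objects API: every name below is hypothetical.

type Role = "advisor" | "participant" | "viewer"; // "viewer" is an assumed read-only role

interface Peer {
  id: string;
  role: Role;
  send: (update: Uint8Array) => void; // stands in for a WebSocket send
}

class TeamRoom {
  private peers = new Map<string, Peer>();
  private history: Uint8Array[] = []; // replayed to peers that join late

  join(peer: Peer): void {
    this.peers.set(peer.id, peer);
    this.history.forEach((update) => peer.send(update));
  }

  leave(peerId: string): void {
    this.peers.delete(peerId);
  }

  isIdle(): boolean {
    return this.peers.size === 0;
  }

  // Record an update and relay it to every other peer.
  // Returns false if the sender is unknown or lacks edit rights.
  submit(senderId: string, update: Uint8Array): boolean {
    const sender = this.peers.get(senderId);
    if (!sender || sender.role === "viewer") return false;
    this.history.push(update);
    this.peers.forEach((peer) => {
      if (peer.id !== senderId) peer.send(update);
    });
    return true;
  }
}

class RoomRegistry {
  private rooms = new Map<string, TeamRoom>();

  // Always returns the same room for the same team, creating it on first use.
  roomFor(teamId: string): TeamRoom {
    let room = this.rooms.get(teamId);
    if (!room) {
      room = new TeamRoom();
      this.rooms.set(teamId, room);
    }
    return room;
  }

  // Drop rooms with no connected peers; returns how many were removed.
  // A real system would persist the room's state before letting it go.
  evictIdle(): number {
    let removed = 0;
    this.rooms.forEach((room, teamId) => {
      if (room.isIdle()) {
        this.rooms.delete(teamId);
        removed += 1;
      }
    });
    return removed;
  }
}
```

Because every edit for a team passes through one room, access checks and ordering live in a single place, and idle rooms cost nothing once evicted.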

For the AI tutor, I knew I wanted to use Anthropic's Claude API because of its models' enterprise-ready safety features and leading coding capabilities. I also wanted it to genuinely help students learn, not just give them answers. So, the tutor has access to the problem description, the student's current code, and any error messages from their last submission. It also has a system prompt that guides how it supports students and authoritatively defines the limitations it should respect. Because the system is student-facing, I also implemented a second AI "judge" (running OpenAI's GPT-4o) that evaluates each tutor response and hides it if it breaks any rules or is otherwise incorrect.
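The two-stage flow can be sketched without calling any real SDK. The following is a minimal illustration under my own assumptions, not the production code: `TutorContext`, `TutorRequest`, `JudgeVerdict`, and the fallback text are hypothetical, and the actual model calls (tutor and judge) are left out so that only the context assembly and the hide-or-show decision are shown.

```typescript
// Hypothetical shapes for illustration; no real model SDK is called here.
interface TutorContext {
  problem: string;      // problem description
  code: string;         // the student's current code
  lastErrors: string[]; // error messages from the last submission
}

interface TutorRequest {
  system: string; // the tutor's system prompt
  user: string;   // context plus the student's question
}

interface JudgeVerdict {
  followsRules: boolean; // did the reply respect the tutor's limitations?
  isCorrect: boolean;    // is the reply technically accurate?
}

// Assumed placeholder text shown when the judge rejects a reply.
const HIDDEN_REPLY_NOTICE = "This response was hidden. Try rephrasing your question.";

// Bundle everything the tutor is allowed to see into one request.
function buildTutorRequest(systemPrompt: string, ctx: TutorContext, question: string): TutorRequest {
  const errors = ctx.lastErrors.length > 0 ? ctx.lastErrors.join("\n") : "(none)";
  const user = [
    "## Problem", ctx.problem,
    "## Student's current code", ctx.code,
    "## Errors from last submission", errors,
    "## Student's question", question,
  ].join("\n");
  return { system: systemPrompt, user: user };
}

// Show the tutor's reply only if the judge approved it on both counts.
function replyToShow(tutorReply: string, verdict: JudgeVerdict): string {
  return verdict.followsRules && verdict.isCorrect ? tutorReply : HIDDEN_REPLY_NOTICE;
}
```

Keeping the judge's decision in a small pure function makes the student-facing behavior easy to test independently of either model.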

Autograding was complicated because I wanted to support multiple programming languages and frameworks, while also making it easy for organizers to define test cases. While many comparable systems require writing custom test code for each language, I decided to create a more standardized, language-agnostic type for test cases:

```typescript
type TestCase = {
  // String: stdin
  // Array: positional arguments to the main function
  input: { type: string; value: string }[] | string
  // String: passes if output matches exactly
  // Function: passes if returns true when passed the output
  expectation: string | ((output: string) => boolean)
}

// For example:
const example: TestCase = {
  input: [
    { type: "number", value: "1" },
    { type: "number", value: "2" },
  ],
  expectation: (output) => output.includes("3"),
}
```

Then, for each supported language, I had a function to convert that input array into code that executes the main function (or another if configured at the challenge level) with positional arguments. For example, the test case above would become the following for a Python submission:

```python
main(1, 2)
```

Another option would have been plain stdin/stdout, which I also support: pass a single string as the input and it is fed to the program on stdin. Supporting both gives organizers flexibility and lets students practice both styles of input and output.
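A converter of this kind might look roughly like the sketch below. It is an illustration rather than Foundry's implementation: `toPythonLiteral`, `toPythonCall`, and `passes` are hypothetical names, and the set of supported `type` values (`number`, `boolean`, `string`) is an assumption.

```typescript
type Arg = { type: string; value: string };

type TestCase = {
  input: Arg[] | string;
  expectation: string | ((output: string) => boolean);
};

// Render one typed argument as a Python literal.
// The supported type names here are assumptions for this sketch.
function toPythonLiteral(arg: Arg): string {
  switch (arg.type) {
    case "number": {
      const text = arg.value.trim();
      if (text === "" || !isFinite(Number(text))) {
        throw new Error("Not a number: " + arg.value);
      }
      return text;
    }
    case "boolean":
      return arg.value === "true" ? "True" : "False";
    case "string":
      // A JSON string literal is also a valid Python string literal for typical text.
      return JSON.stringify(arg.value);
    default:
      throw new Error("Unsupported argument type: " + arg.type);
  }
}

// Build the line of Python that invokes the entry point with positional arguments.
function toPythonCall(input: Arg[], entryPoint: string = "main"): string {
  return entryPoint + "(" + input.map(toPythonLiteral).join(", ") + ")";
}

// Apply the expectation: exact match for strings, predicate result for functions.
function passes(testCase: TestCase, output: string): boolean {
  const expectation = testCase.expectation;
  return typeof expectation === "string" ? output === expectation : expectation(output);
}
```

Validating each value before rendering matters here because the result is executed as code; an unchecked `value` would let a test definition inject arbitrary Python.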

Autograding also required strong sandboxing. This is the one component that does not run serverless, although I did build it to autoscale using AWS Elastic Beanstalk. Each submission runs in an isolated Docker container with strict resource limits and no network access. Test cases execute with timeouts, and the system captures stdin, stdout, stderr, exit codes, and the evaluated output of the test case runners in order to grade submissions and provide detailed feedback. Grading jobs and results are synchronized between these separate nodes and the portal through the main database, which also retains them indefinitely for display. To distribute load cleanly, each grading node polls the shared database for new jobs and atomically claims the next available submission using PostgreSQL row-level locking. Had E2B and Cloudflare's Sandbox API, popularized by code-executing AI agents, been in GA when I was developing Foundry, I might have chosen them rather than reinvent the wheel (although then I wouldn't have learned as much as I did about Docker and AWS's API!).
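One common way to have several workers claim jobs from a shared PostgreSQL table is `SELECT ... FOR UPDATE SKIP LOCKED`. The sketch below shows that pattern; it is not necessarily the exact query Foundry uses. The `grading_jobs` table, its columns, and the `Queryable` interface are assumptions (the interface is shaped like the `query(text, values)` method of common PostgreSQL clients).

```typescript
// Minimal shape of a SQL client, assumed for this sketch.
interface Queryable {
  query(text: string, values?: unknown[]): Promise<{ rows: Record<string, unknown>[] }>;
}

// Hypothetical schema: grading_jobs(id, submission_id, status, claimed_by, claimed_at, created_at).
// The inner SELECT locks the oldest queued row; SKIP LOCKED makes concurrent
// workers pass over rows that another worker has already locked instead of waiting.
const CLAIM_NEXT_JOB_SQL = `
  UPDATE grading_jobs
  SET status = 'running', claimed_by = $1, claimed_at = now()
  WHERE id = (
    SELECT id
    FROM grading_jobs
    WHERE status = 'queued'
    ORDER BY created_at
    LIMIT 1
    FOR UPDATE SKIP LOCKED
  )
  RETURNING id, submission_id
`;

interface ClaimedJob {
  id: string;
  submissionId: string;
}

// Claim at most one job for this node; resolves to null when the queue is empty.
async function claimNextJob(db: Queryable, nodeId: string): Promise<ClaimedJob | null> {
  const result = await db.query(CLAIM_NEXT_JOB_SQL, [nodeId]);
  if (result.rows.length === 0) return null;
  const row = result.rows[0];
  return { id: String(row.id), submissionId: String(row.submission_id) };
}
```

Because the lock and the status change happen in a single statement, two nodes polling at the same moment cannot both receive the same submission.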

The final technical challenge was integrating with Stripe for card issuing, since most coding competition platforms don't handle financial transactions, let alone fiscal sponsorship. This was another case where I had to read a lot about PostgreSQL's atomic transaction guarantees to ensure that the system's ledger remained consistent and accurate even during peak usage or deliberate abuse. It would be very bad for CS4IL's finances if the Stripe webhook handler approved a transaction from a team with insufficient funds, for example. I also had to implement end-to-end encryption using AES to display sensitive card details to authorized team members.
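To make the insufficient-funds concern concrete, one way to keep a balance check and a debit atomic is to fold them into a single conditional `UPDATE`. This is a sketch of that general technique, not a description of Foundry's ledger: the `team_balances` table, its columns, and the `Queryable` interface are hypothetical, and no Stripe API is called.

```typescript
// Minimal shape of a SQL client, assumed for this sketch.
interface Queryable {
  query(text: string, values?: unknown[]): Promise<{ rows: Record<string, unknown>[] }>;
}

// Hypothetical schema: team_balances(team_id, available_cents).
// The WHERE clause makes the debit conditional on sufficient funds. PostgreSQL
// locks the row for the update, so two concurrent authorizations cannot both
// spend the same money: the second one re-checks the balance after the first commits.
const DEBIT_IF_FUNDED_SQL = `
  UPDATE team_balances
  SET available_cents = available_cents - $2
  WHERE team_id = $1 AND available_cents >= $2
  RETURNING available_cents
`;

// Resolves to true only if the full amount was reserved for this team.
async function authorizeSpend(db: Queryable, teamId: string, amountCents: number): Promise<boolean> {
  // Reject malformed amounts before touching the database.
  if (!Number.isInteger(amountCents) || amountCents <= 0) return false;
  const result = await db.query(DEBIT_IF_FUNDED_SQL, [teamId, amountCents]);
  return result.rows.length === 1; // no row returned means insufficient funds or unknown team
}
```

A webhook handler could approve the card authorization only when this resolves to `true`, so the ledger never goes negative even if many authorization requests arrive at once.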

Extensive load testing and security scanning with Burp Suite and OWASP ZAP went into ensuring that Foundry was production-ready. However, with an audience of computer science experts, I knew this site could benefit greatly from an official bug reporting and security advisory program, which I implemented alongside lots of other analytics with the amazing PostHog.

AI Pedagogy

Following in the footsteps of Harvard's David Malan through CS50, Foundry's editor includes a sidebar AI assistant prompted to be a Socratic tutor. Its goal is to help students develop their problem-solving skills without giving them the "answer" or writing code for them, offering a way to use LLMs responsibly and productively in an educational setting. How did we do this? We developed a long, detailed system prompt that adapts CS-specific and general pedagogical advice from a wide range of experienced teachers and professors. We also took inspiration from how industry-led tools like OpenAI's Study Mode work.

Reflection

I am very proud of how I built Foundry; it's the exact platform that I wish I had for competitive programming and, long ago, some beginner hackathons. I also think the engineering is as bulletproof as I could have gotten it given the limited time and resources I had. I truly believe it is one of my most well-architected projects to date.

On the business side, I'm also excited about the possibility of scaling Foundry to more organizations. CS4IL had many platforms to consider in their search, none of which ultimately fit their needs perfectly. I think many similar competitions are in the same spot, and I think Foundry could be a great fit for them.

Screenshots